Remote Sensing

Remote Sensing augments reclaimed oil paintings, turning them into an immersive multisensory experience. As users interact with the paintings from a distance, the paintings respond with both auditory and haptic feedback.

Concepting

Installation floor plan

User Interface Concept

3D Mockup and Render

Interaction

A virtual set was built in TouchDesigner and calibrated to the physical space. A grayscale interaction map was created and overlaid on the painting. A Leap Motion controller tracked the user’s hands, and a ray was cast from the tracked index finger toward the painting. The ray sampled the grayscale map at the point where it struck, and that value drove the interaction and feedback.
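The ray-sampling step can be sketched in plain Python. This is an illustrative sketch of the underlying geometry, not the TouchDesigner network used in the piece. It assumes that the calibrated painting lies on the plane z = 0 and spans `width` by `height` units, that the fingertip position and pointing direction arrive in that same coordinate frame, and that the interaction map is a 2D array of 8-bit gray values with row 0 at the top. The function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def ray_plane_hit(origin: Vec3, direction: Vec3) -> Optional[Tuple[float, float]]:
    """Intersect a fingertip ray with the painting plane z = 0 (assumed).

    Returns the (x, y) hit point, or None if the ray never reaches the plane.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dz) < 1e-9:  # ray runs parallel to the painting
        return None
    t = -oz / dz  # distance along the ray to the plane
    if t < 0:  # the painting is behind the finger
        return None
    return ox + t * dx, oy + t * dy


def sample_map(gray: Sequence[Sequence[int]], x: float, y: float,
               width: float, height: float) -> Optional[float]:
    """Nearest-neighbour lookup in the grayscale map, normalised to 0..1.

    Assumes y = 0 is the bottom edge of the painting and row 0 of the map is
    the top edge, so the vertical axis is flipped. Returns None off-canvas.
    """
    if not (0.0 <= x <= width and 0.0 <= y <= height):
        return None
    rows, cols = len(gray), len(gray[0])
    col = min(int(x / width * cols), cols - 1)
    row = min(int((1.0 - y / height) * rows), rows - 1)
    return gray[row][col] / 255.0
```

For example, a fingertip at (0.3, 0.2, 0.5) pointing straight at the canvas along (0, 0, -1) hits the plane at (0.3, 0.2). On a 1 x 1 painting with a 2 x 2 map, that point falls in the bottom-left cell. Nearest-neighbour lookup keeps the sketch short; bilinear sampling would give smoother transitions between regions.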

Scan of the Physical Painting

Grayscale Interaction Map

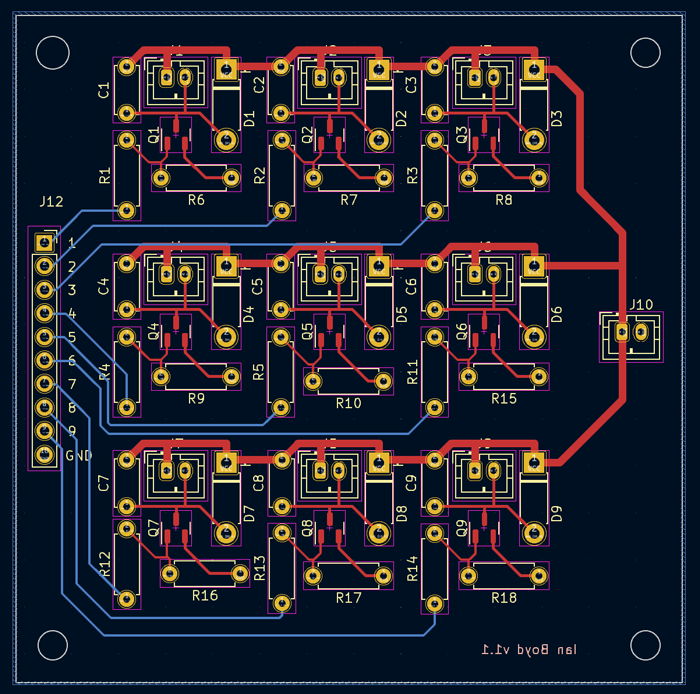

Feedback

Both haptic and aural feedback were created for the piece. The aural feedback took the form of pentatonic tones, and lingering on a section of the painting played a distinct ambient sound. For the haptic feedback, pixel values drove a custom-fabricated 3x3 vibration motor array. The motors were controlled by an ESP32 microcontroller receiving UDP packets from TouchDesigner and were switched by N-channel logic-level MOSFETs on a custom-fabricated motor driver. The array is powered by a LiPo battery, so it can be repositioned and carried around the gallery.
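The two feedback paths can be sketched as two small mappings from sampled map values: one to a minor pentatonic pitch and one to a UDP payload for the 3x3 motor array. Everything concrete here is an assumption made for illustration. The 220 Hz root, the two-octave range, the one-byte-per-motor duty-cycle packet, and the idea that the ESP32 uses each byte as a PWM duty cycle are not taken from the original build, and the function names are hypothetical.

```python
import socket
from typing import Sequence, Tuple

# Minor pentatonic scale as semitone offsets from the root.
MINOR_PENTATONIC = (0, 3, 5, 7, 10)


def value_to_pentatonic_hz(value: float, root_hz: float = 220.0,
                           octaves: int = 2) -> float:
    """Quantise a 0..1 map value to a minor pentatonic pitch in Hz.

    The root and range are assumptions; the value is clamped to 0..1 first.
    """
    steps = len(MINOR_PENTATONIC) * octaves
    clamped = max(0.0, min(1.0, value))
    index = min(int(clamped * steps), steps - 1)
    octave, degree = divmod(index, len(MINOR_PENTATONIC))
    semitones = 12 * octave + MINOR_PENTATONIC[degree]
    return root_hz * 2 ** (semitones / 12)  # equal-temperament pitch


def encode_motor_frame(values: Sequence[float]) -> bytes:
    """Pack nine 0..1 intensities (row-major 3x3) into nine duty-cycle bytes.

    Hypothetical packet layout: one unsigned byte per motor, 0 = off, 255 = full.
    """
    if len(values) != 9:
        raise ValueError("expected 9 values for a 3x3 motor array")
    return bytes(round(max(0.0, min(1.0, v)) * 255) for v in values)


def send_motor_frame(sock: socket.socket, addr: Tuple[str, int],
                     values: Sequence[float]) -> None:
    """Send one frame to the microcontroller over UDP (fire-and-forget)."""
    sock.sendto(encode_motor_frame(values), addr)


# Example usage (the address and port are placeholders, not the real setup):
#   sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
#   send_motor_frame(sock, ("192.168.4.1", 8888), [0.0] * 8 + [1.0])
```

With these defaults, a value of 0.0 maps to the 220 Hz root and 0.5 maps one octave up to 440 Hz. UDP suits this kind of link because a dropped frame is simply superseded by the next one, so nothing needs to be retransmitted.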

VST playing minor pentatonic tones in TouchDesigner

CAD render of the haptic enclosure

Haptic puck with cover on

Haptic puck open

Motor driver PCB sketch